Classifying the criteria clusters into foundational and contextual criteria helps us answer two key questions: “what is the assessment of the quality of the tool itself, regardless of its context?” and “what is the assessment of the potential impact of the tool in a specific setting, given its contextual fit?”. This sociotechnical approach rests on the idea that the design and performance of any innovation can only be understood and improved if both ‘social’ and ‘technical’ aspects are brought together and treated as interdependent parts of a complex system36. It therefore goes beyond assessing isolated technologies and takes their intended context into account. Classifying the criteria as foundational or contextual also makes the evaluation process more efficient for assessors appraising a tool for more than one potential context: they only have to repeat the contextual assessment for each new context, while the assessment of the foundational criteria remains the same. It is worth noting, however, that the speed at which technologies are developing requires periodic revisions of the foundational assessment to reflect how the tool being evaluated evolves as technology progresses.

Figure 7 illustrates this classification logic and distinguishes between must-have assessment criteria, which met the expert consensus, and an additional nice-to-have checklist, which encompasses the criteria that did not meet the consensus. This nice-to-have checklist was included because all criteria that did not meet the consensus (except for two) still had a consensus level above 50%, meaning that more than half of the experts rated them as very relevant or extremely relevant (4 or 5 on the Likert scale). Considering these additional criteria may therefore still make sense in some cases, even if they are not regarded as an essential requirement.
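The must-have versus nice-to-have split can be sketched as follows. This is a minimal illustration, assuming that a criterion's consensus level is the share of experts who rated it 4 or 5 on the 5-point Likert scale, as described above. The predefined consensus threshold is not restated in this section, so `threshold` is a hypothetical parameter, and the example ratings are invented.

```python
from typing import Sequence


def consensus_level(ratings: Sequence[int]) -> float:
    """Share of experts rating a criterion 4 (very relevant) or 5 (extremely relevant)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must use the 1-5 Likert scale")
    return sum(1 for r in ratings if r >= 4) / len(ratings)


def classify(ratings: Sequence[int], threshold: float) -> str:
    """Must-have if the consensus threshold is met, otherwise nice-to-have.

    `threshold` is a hypothetical stand-in for the study's predefined consensus level.
    """
    return "must-have" if consensus_level(ratings) >= threshold else "nice-to-have"


def majority_relevant(ratings: Sequence[int]) -> bool:
    """True when more than 50% of experts rated the criterion 4 or 5."""
    return consensus_level(ratings) > 0.5


# Invented ratings from ten experts: seven rated the criterion 4 or 5.
example = [5, 4, 4, 3, 2, 5, 4, 1, 4, 5]
print(consensus_level(example))           # 0.7
print(classify(example, threshold=0.75))  # nice-to-have (illustrative threshold)
print(majority_relevant(example))         # True
```

A criterion like the one in this example would fall into the nice-to-have checklist while still being rated highly relevant by a majority of the panel.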

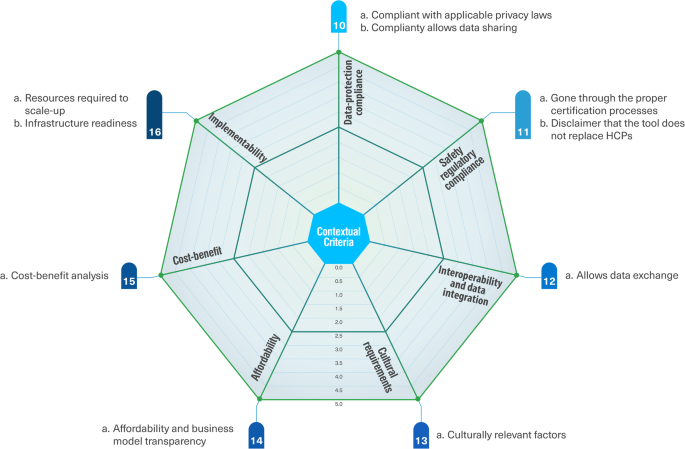

The foundational criteria comprise nine clusters: technical aspects, clinical utility and safety, usability and human centricity, functionality, content, data management, endorsement, maintenance, and the developer. The contextual criteria comprise seven clusters: data-protection compliance, safety regulatory compliance, interoperability and data integration, cultural requirements, affordability, cost-benefit, and implementability. Some of these clusters may include more than one subcriterion.
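The efficiency gain described above, where foundational clusters are assessed once and only contextual clusters are repeated per setting, can be sketched as a small data structure. This is a hypothetical illustration, not the project's interactive tool: the class name `ToolAssessment`, the context names, and the scores are invented; only the cluster names come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Cluster names as listed in the text; scores use the tool's 0-5 scale.
FOUNDATIONAL_CLUSTERS = (
    "technical aspects", "clinical utility and safety", "usability and human centricity",
    "functionality", "content", "data management", "endorsement", "maintenance", "developer",
)
CONTEXTUAL_CLUSTERS = (
    "data-protection compliance", "safety regulatory compliance",
    "interoperability and data integration", "cultural requirements",
    "affordability", "cost-benefit", "implementability",
)


def _check(scores: dict[str, float], expected: tuple[str, ...]) -> dict[str, float]:
    """Reject score sets that miss clusters or add unknown ones."""
    if set(scores) != set(expected):
        raise ValueError(f"expected clusters {sorted(expected)}, got {sorted(scores)}")
    return dict(scores)


@dataclass
class ToolAssessment:
    tool: str
    # Assessed once per tool version and reused for every context.
    foundational: dict[str, float]
    # Context name -> contextual cluster scores, one entry per setting appraised.
    contextual: dict[str, dict[str, float]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.foundational = _check(self.foundational, FOUNDATIONAL_CLUSTERS)

    def add_context(self, context: str, scores: dict[str, float]) -> None:
        """A new setting only requires the seven contextual clusters."""
        self.contextual[context] = _check(scores, CONTEXTUAL_CLUSTERS)

    def scorecard(self, context: str) -> dict[str, float]:
        """Foundational plus contextual clusters for one setting (16 entries)."""
        return {**self.foundational, **self.contextual[context]}


# Hypothetical tool appraised for two settings; the foundational scores are entered once.
assessment = ToolAssessment("tool X", {c: 5.0 for c in FOUNDATIONAL_CLUSTERS})
assessment.add_context("hospital A", {c: 2.5 for c in CONTEXTUAL_CLUSTERS})
assessment.add_context("region B", {c: 5.0 for c in CONTEXTUAL_CLUSTERS})
print(len(assessment.scorecard("hospital A")))  # 16
```

When the tool itself changes, only `foundational` needs to be re-entered, which mirrors the periodic revision of the foundational assessment mentioned above.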

Considering the substantial diversity of eHealth tools, their use cases, integration levels, and safety risk levels, it is important to note that some assessment criteria may not apply to all tools. However, studies have shown that classifying tools according to existing categorizations can prove quite challenging because of the lack of standardization in this area37. We focused on safety risk categorization according to the NICE evidence standards framework21, given that safety is one of the key priorities when assessing these tools. Figure 8 shows the foundational criteria and Fig. 9 shows the contextual criteria of our proposed sociotechnical framework for assessing patient-facing eHealth tools, according to our expert panel’s consensus.
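Filtering the criteria by risk tier can be sketched as below. This is a minimal sketch under stated assumptions: the tier labels A, B, and C follow the later mention of “risk tiers B and C” in this section, the disclaimer criterion's tiers are taken from that same passage, and the cluster assignments and the second catalogue entry are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

RISK_TIERS = ("A", "B", "C")  # tier labels as referenced later in the text


@dataclass(frozen=True)
class Criterion:
    name: str
    cluster: str
    tiers: frozenset[str]  # risk tiers this criterion applies to


def applicable_criteria(criteria: list[Criterion], tier: str) -> list[Criterion]:
    """Keep only the criteria that apply to a tool in the given risk tier."""
    if tier not in RISK_TIERS:
        raise ValueError(f"unknown risk tier: {tier!r}")
    return [c for c in criteria if tier in c.tiers]


# Illustrative catalogue. The disclaimer criterion's tiers (B and C) follow the text;
# the cluster assignments and the "ease of use" entry are hypothetical.
CATALOGUE = [
    Criterion("disclaimer that information does not replace professional judgement",
              "clinical utility and safety", frozenset({"B", "C"})),
    Criterion("ease of use", "usability and human centricity", frozenset(RISK_TIERS)),
]

print([c.name for c in applicable_criteria(CATALOGUE, "A")])  # ['ease of use']
```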

The proposed assessment tool, with a full description of each assessment criterion, tangible examples of how to assess it, the risk tier it applies to, and additional guidance for assessors, is available in Supplementary Reference 1 in the Supplementary Information file. Tables 2 and 3 summarize the definitions of the foundational and contextual criteria clusters, respectively. The interactive assessment tool will be available for download on the project website38. For each criterion, the assessor can follow a detailed description and examples to judge whether the criterion was met (5/5), partially met (2.5/5), or not met (0/5). For some criteria, the assessment options are binary: met or not met. For instance, when assessing whether a tool has undergone proper certification processes, the criterion cannot be partially met. A “not applicable” option was added for criteria that may be optional for some tools. For example, the criterion that assesses whether the tool can foster interaction between healthcare professionals and their patients may not apply to autonomous tools designed to be used independently. For criteria clusters that include more than one subcriterion, the mean score is calculated to reflect the average assessment of the cluster. Mean scores are used in line with the familiar format of star ratings and similar assessment scales39; they are also more fit for purpose than total scores, because some criteria may be assessed as not applicable in specific cases.
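The scoring rules above (5, 2.5, or 0 points, binary criteria that cannot be partially met, a “not applicable” option, and a cluster mean) can be sketched as follows. This is a minimal illustration, not the project's tool; the names `Rating`, `criterion_score`, and `cluster_score` are hypothetical.

```python
from __future__ import annotations

from enum import Enum
from statistics import mean


class Rating(Enum):
    """Assessment options and the points they carry (out of 5)."""
    MET = 5.0
    PARTIALLY_MET = 2.5
    NOT_MET = 0.0
    NOT_APPLICABLE = None  # excluded from the cluster mean


def criterion_score(rating: Rating, binary: bool = False) -> float | None:
    """Score one criterion; binary criteria (e.g. certification) cannot be partially met."""
    if binary and rating is Rating.PARTIALLY_MET:
        raise ValueError("binary criteria can only be met or not met")
    return rating.value


def cluster_score(subcriteria: list[tuple[Rating, bool]]) -> float | None:
    """Mean over applicable subcriteria; None if every subcriterion is not applicable."""
    scores = [criterion_score(rating, binary) for rating, binary in subcriteria]
    applicable = [s for s in scores if s is not None]
    return mean(applicable) if applicable else None


# A cluster with one binary criterion met, one criterion partially met, and one not applicable.
cluster = [(Rating.MET, True), (Rating.PARTIALLY_MET, False), (Rating.NOT_APPLICABLE, False)]
print(cluster_score(cluster))  # 3.75
```

Summing this cluster would give 7.5, which cannot be compared directly with a cluster in which all three subcriteria applied; the mean stays on the same 0-5 scale regardless of how many subcriteria were marked not applicable.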

Where relevant, assessors are given additional resources that support their evaluation by providing more detail about quality standards for the specific criterion. For instance, clinical evidence is one of the criteria in the foundational cluster “clinical utility and safety”, and it may require deeper examination using additional standards tailored to that area. In this example, the guidance includes further details on the checklist of evidence quality criteria from the evidence DEFINED (Digital Health for EFfectiveness of INterventions with Evaluative Depth) framework40. Such additional resources aim to give assessors more insight into how to assess the quality of the criterion, particularly where they may lack experience in that specific domain.

We methodically reflected on the considerable challenges facing eHealth assessment efforts that we identified in our foundational work18. While some of them were beyond our control (e.g., information availability and regulatory complexity), we focused our efforts on addressing several key challenges, namely: validating the assessment criteria with intended users and concerned parties to reflect their real-life needs, ensuring assessor diversity in our expert panel to reflect a wide breadth of perspectives, considering healthcare contextuality, discussing ways to address subjective measures, and ensuring the practicability of the proposed assessment tool to make it as accessible and usable as possible.

The expert panel helped us validate the assessment criteria, and the participants’ diversity was instrumental in reflecting the different priorities and perspectives of all relevant concerned parties. The initial list of criteria included contextual criteria that the experts validated and confirmed as must-have in the final framework. Experts were also asked to suggest additional assessment criteria that they deemed important. These new criteria were validated in the second-round survey to ensure the completeness of the final list. Our discussions with the experts went beyond finalizing the list of assessment criteria to address the practicability and relevance of the proposed assessment tool, so as to make it as accessible and usable as possible for relevant decision makers.

The first challenge we discussed with the experts was the contextuality of some of the assessment criteria. Research has shown that contextual factors that go beyond the tool itself, such as implementation costs, clinical workflows, required resources and infrastructure, and a patient’s characteristics and socioeconomic status, typically play a major role in eHealth acceptance and adoption41,42,43,44,45,46. Because healthcare is so context-dependent, engagement with eHealth tools becomes considerably more difficult when contextual awareness is not considered47. However, although there are some standards for assessing a tool’s usability or scientific evidence, there appears to be a gap in guidelines supporting the evaluation of implementation and processes8, resulting in a lack of contextual assessment criteria.

While numerous assessment initiatives and frameworks focus solely on the tool itself and do not take the healthcare context into account, we advocate for the inclusion of contextual criteria (e.g., readiness of the local infrastructure, resources required for scale-up, cost-benefit analysis, reimbursement standards, and cultural aspects such as local language), as these have been shown to strongly influence the adoption and scale-up of such tools3,41,43. The unanimous expert consensus on the inclusion of contextual criteria (55/55, 100%) confirmed their importance and relevance for a comprehensive assessment.

The second challenge was whether to present the assessment results as a single score or as a scorecard. Some assessment initiatives that focus on curating, certifying, or accrediting eHealth tools use a single score to indicate quality, with the aim of making it easy for potential customers to compare tools and distinguish between low- and high-quality options. While these initiatives certainly have merit in advancing assessment efforts, scholars argue that they may not give sufficiently clear direction on which tools best meet specific requirements and would integrate best into a particular healthcare context13,16. Hence, a scorecard approach may be more suitable for context-specific evaluations that involve multiple concerned parties13.

Expert consensus confirmed that the scorecard was a more balanced way of presenting the assessment results, with 78% of the experts (43/55) favoring this approach. Experts argued that a single score may obscure important details and that the real value of the assessment tool lies in understanding the breakdown, which shows the specific strengths and weaknesses of the tool being assessed. Some experts (12/55, 22%) suggested that, ideally, the results would be presented as a combination of a scorecard and a composite score that gives more weight to the criteria the assessor defines as key priorities for their specific context, making comparison easier when the assessor is evaluating several tools at the same time.
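The combined scorecard and composite score suggested by some experts could be computed as a weighted mean of the cluster scores. This is a hypothetical sketch: the function name, the default weight of 1, the choice to skip clusters scored as not applicable (`None`), and all example scores and weights are assumptions, not part of the published framework.

```python
from __future__ import annotations


def composite_score(scorecard: dict[str, float | None], weights: dict[str, float]) -> float:
    """Weighted mean of cluster scores (0-5); clusters absent from `weights` get weight 1.

    Clusters scored None (not applicable) are skipped so they do not lower the result.
    """
    numerator = denominator = 0.0
    for cluster, score in scorecard.items():
        if score is None:
            continue
        weight = weights.get(cluster, 1.0)
        if weight < 0:
            raise ValueError(f"negative weight for {cluster!r}")
        numerator += weight * score
        denominator += weight
    if denominator == 0:
        raise ValueError("no applicable clusters with positive weight")
    return numerator / denominator


# Invented scorecards for two tools intended for the same purpose.
tool_a = {"usability and human centricity": 5.0, "affordability": 2.5,
          "interoperability and data integration": 0.0}
tool_b = {"usability and human centricity": 2.5, "affordability": 2.5,
          "interoperability and data integration": 5.0}
# The assessor's context treats interoperability as a key priority (hypothetical weight).
priorities = {"interoperability and data integration": 3.0}

print(composite_score(tool_a, priorities))  # 1.5
print(composite_score(tool_b, priorities))  # 4.0
```

Without the priority weight, the two tools score 2.5 and about 3.33; the weighting widens the gap because tool A fails the criterion that matters most in this hypothetical context. The scorecards themselves remain the primary output, and the composite only supports side-by-side ranking.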

The third challenge we discussed was how to balance automating the assessment with AI against a proactive appraisal approach that requires testing the tool. Recent developments in artificial intelligence (AI) have enabled several appraisal initiatives to use sophisticated AI models that evaluate eHealth tools by scanning publicly available information to form an initial quality assessment. Although this approach may allow large numbers of tools to be assessed in a relatively short time, one risk of such mass appraisal is that it may favor tools with sophisticated marketing and a polished public image, which do not necessarily offer superior clinical utility. Another challenge is the lack of information18,48. A previous study showed that about two-thirds of eHealth providers offered no information about the tool itself or about the credentials of its developers or consultants, and only 4% provided information supporting its efficacy49.

Therefore, we propose a proactive approach to appraisal that requires a hands-on trial of the tool and, if necessary, contacting the developer, in order to obtain a more complete and in-depth assessment of the tool’s quality. This approach demands more engagement and effort from assessors, but it yields deeper insight into the specific strengths and weaknesses of the tool being evaluated, leading to better and more informed decisions. Other researchers have also recommended testing and hands-on trials to properly assess an eHealth tool16,48; some have even recommended using a tool for at least two hours across more than one day before reaching a final evaluation11. Previous studies have also suggested criteria and questions that physicians may ask eHealth providers directly to gain a complete understanding of a tool before adopting it or recommending it to their patients5.

A small number of experts (4/55, 7%) disfavored the proactive approach, arguing that it required too much time and effort, which may discourage assessors from using the proposed assessment tool. However, most experts (36/55, 65%) favored it, reasoning that a proper, in-depth assessment requires a hands-on trial of the tool being assessed and contact with its developers if needed. Some experts (10/55, 18%) confirmed the need for a proactive approach and suggested a blended method in which an AI model collects the available appraisal data that do not require trying the tool, complemented by the assessor’s hands-on assessment of the criteria that require it.

The fourth challenge was the subjectivity of some of the assessment criteria. The inclusion of subjective measures has been debated in the literature, as it may cause the assessment outcome to vary depending on the assessors’ personal views. Previous research has confirmed that some characteristics of eHealth tools are indeed more difficult to rate consistently11. Low rating agreement among some of the widely known assessment initiatives, such as ORCHA, MindTools, and One Mind PsyberGuide, is not uncommon50. Several scholars nevertheless strongly recommend including subjective criteria, such as ease of use and visual appeal, despite this challenge, given their importance as fundamental drivers of adoption41,42,43,51,52. Integrating subjective criteria, such as user experience evaluation, into the review process could therefore improve tool adherence and health outcomes34. Expert consensus clearly supported this view, with several subjective criteria meeting the predefined consensus level.

The experts recommended several approaches that could help minimize the variability in assessing subjective criteria. The most prominent recommendation was to ensure assessor diversity (34/55, 62%), contributing to a balanced assessment that reflects different perspectives and priorities. This is an important factor, as the literature has reported that some assessment initiatives do not necessarily involve all relevant concerned parties in the evaluation11,28. Assessor diversity also helps balance the conflicting needs of the different concerned parties; previous research has shown, for example, that patient needs are not always aligned with clinicians’ workflow preferences53. Evidence of user engagement and co-creation with patients and clinicians is another way of balancing the perspectives of the different concerned parties in the design54, and this criterion clearly met the expert consensus (81%).

Research evidence, such as usability studies, was also recommended (20/55, 36%) for assessing usability and acceptability. It is important to note that not all usability studies are of equal rigor and quality. Assessors are advised to examine such studies closely, checking factors such as sample size, participant diversity, and the rigor of the study methodology. Clear and specific assessor guidance, so that assessors reach a common understanding of how to assess these criteria (16/55, 29%), was also recommended to minimize the subjectivity of the assessment.

Using proxy criteria such as customer ratings was suggested as a workaround to gauge a tool’s acceptance when rigorous user evaluation is not available (9/55, 16%), with the caveat that a critical mass of ratings must be reached before the user rating is considered. Although user ratings may be one of the decisive factors for potential users deciding whether to use a tool, this criterion has been disputed not only within the expert panel but also in the literature34. While some studies show a moderate correlation between user ratings and expert appraisal39, others have reported a weak correlation between the two11. This lack of agreement was also reflected in the panel’s response to the criterion “visible users’ reviews”, which did not meet expert consensus (44%) and is considered a nice-to-have criterion in the proposed assessment framework. Another proxy criterion suggested by experts was the use of a tool’s usage metrics as an objective indication of its usability (4/55, 7%).
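The critical-mass caveat for user ratings can be expressed as a simple guard. This is a minimal sketch: the function name is hypothetical, and `min_ratings` is an assumed parameter because the text does not specify what counts as a critical mass; the star ratings are invented.

```python
from __future__ import annotations

from statistics import mean
from typing import Sequence


def rating_proxy(star_ratings: Sequence[int], min_ratings: int) -> float | None:
    """Average user star rating, or None when too few ratings exist to be considered.

    `min_ratings` is a hypothetical critical-mass threshold; the text does not fix one.
    """
    if min_ratings < 1:
        raise ValueError("min_ratings must be at least 1")
    if any(r not in (1, 2, 3, 4, 5) for r in star_ratings):
        raise ValueError("star ratings must be between 1 and 5")
    if len(star_ratings) < min_ratings:
        return None  # below critical mass: do not use as an acceptance proxy
    return mean(star_ratings)


print(rating_proxy([5, 4] * 10, min_ratings=50))  # None (only 20 ratings)
print(rating_proxy([5, 4] * 30, min_ratings=50))  # 4.5
```

Returning `None` rather than a low score keeps an under-reviewed tool from being penalized; the assessor simply falls back on other evidence.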

The fifth challenge was whether to allow some of the criteria to be optional. Some criteria clearly met expert consensus but were still debated in the survey comments as to whether they applied to all types of tools. For example, the criterion that assesses whether the tool can foster interaction between healthcare professionals and their patients met 86% consensus. However, some experts argued that it may not apply to autonomous tools designed to be used independently (e.g., for some mental health tools, interaction with the traditional healthcare system is sometimes not even desired, for reasons of anonymity and privacy).

The majority of experts (39/55, 71%) acknowledged that the wide variability of eHealth tools makes some criteria necessarily optional, even though they met the expert consensus. This is consistent with other assessment initiatives and rating systems that also include a “not applicable” option for criteria that may be optional for some tools48.

The sixth challenge concerned the use of current versus progressive criteria. Some criteria were disputed for being perceived as shortsighted or as hindering innovation. For instance, the safety criterion that assesses whether the tool contains a disclaimer stating that the information provided does not replace a healthcare professional’s judgement met 81% consensus for risk tiers B and C. However, three experts favored a more progressive assessment framework that would drop such a criterion, given the AI-driven medicine we are witnessing today. This progressive stance may stem from the ‘fail fast, fail often’ culture of technology start-ups, which often clashes with complex healthcare regulations that typically lead to a slower, more cautious process characterized by greater risk aversion and guided by the ‘first, do no harm’ principle16,55.

This tension between safety and innovation was acknowledged by the Commissioner of the Food and Drug Administration (FDA), who admitted that eHealth tools are developing faster than the FDA is able to regulate them56. The vast majority of experts favored the current criteria (50/55, 91%), mainly to safeguard patient safety. Most experts acknowledged that assessment criteria are tied to the current healthcare context, particularly to how progressive the regulations and legislation are. In other words, it is not the criteria themselves that could be prohibitive but rather the regulatory landscape and legislation, and the criteria are set according to the maturity of that overall landscape. Accordingly, the assessment framework and its list of criteria are likely to evolve over time to reflect changing technologies and regulations.

This study has some limitations. The Delphi process has inherent limitations, such as the relatively small number of experts and the potential influence of panel composition on the findings57,58. In addition, the proposed framework and assessment tool require time and expertise, which may be barriers to the engagement of some concerned parties in the evaluation process. Detailed training materials and assessment guidance may help mitigate this barrier. Future research is needed to pilot test the proposed assessment tool and determine its accessibility and usability across different concerned parties, as well as its adaptability to tools under development (e.g., as a requirements checklist for developers). Further refinements of the assessment criteria and their definitions, as well as additional criteria, will likely be required as new technologies and eHealth regulations evolve.

This sociotechnical assessment framework goes beyond the assessment of isolated technologies and considers their intended context. It helps us answer two key questions: “what is the assessment of the quality of the tool itself?” and “what is the assessment of its potential impact in a specific healthcare setting?” The international expert panel helped us validate the assessment criteria, and the participants’ diversity was instrumental in reflecting the different priorities and perspectives of all relevant concerned parties. The proposed assessment tool will inform the development of a specific evaluation process for a single tool. It will also enable side-by-side comparisons of two or more tools intended for the same purpose. However, it does not determine which criteria, at which score level, are go or no-go criteria; this must be decided by those applying the tool, based on the context of use and the available alternatives. More broadly, it will help inform a wide range of concerned parties, including clinicians, pharmaceutical executives, insurance professionals, investors, technology providers, and policymakers, about which criteria to assess when considering an eHealth tool. This will guide them in making informed decisions about which tools to use, recommend to patients, invest in, partner with, or reimburse, based on the tools’ potential quality and their fit with the specific context for which they are being evaluated.